Joshua Brown: First person killed by an automated vehicle?

On July 1st, 2016, the first death of a driver in an automated vehicle became headline news [1] and caused a nationwide wave of concern. Only a couple of hours earlier, experts had held a controversial roundtable discussion about whether they should risk, or even allow, people being killed in beta tests of automated driving functions. Now there is at least one documented case in which an automated vehicle control system failed to detect a life-threatening situation. The question remains: how can an autonomous system make ethical decisions that involve human lives? Control negotiation strategies require ethical conventions to be encoded into decision-making algorithms in advance. This is by no means an easy task, especially since coming up with ethically sound decision strategies in the first place is often very difficult, even for human agents. This workshop seeks to provide a forum for experts from different backgrounds to voice and formalize the ethical aspects of automotive user interfaces in the context of automated driving. The goal is to derive working principles that will guide shared decision-making between human drivers and their automated vehicles.

Technology is (and will be) error-prone

Even with established ADAS technology, a residual risk is always present. The past has shown that system errors and accidents were mainly caused by unknown system boundaries and limitations [2]. For example, many drivers are unaware that an adaptive cruise control (ACC) system does not work properly in stop-and-go traffic or on sharp curves [7]. Human misjudgments of system capabilities are the cause of numerous accidents. However, past events should not be an excuse to accept fatal accidents in the future. According to the World Health Organization (WHO), the number of people killed in manually driven road traffic has remained stable at about 1.25 million per year. Thus, even if automated driving still carries the risk of fatal accidents, one could put forward the following pragmatic argument: "As long as automated vehicles eventually kill fewer people than are currently killed by human error without automated vehicle features, the technology should be used."

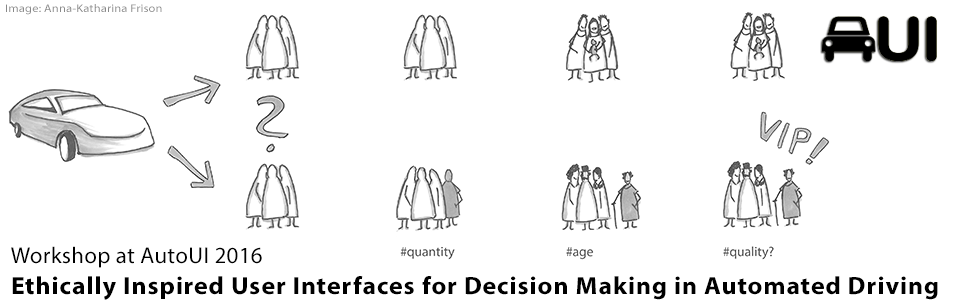

Rationalizing the loss of human life in the name of a greater good, however, is hardly in line with the expectations we generally place on future technologies. Setting out to build machines that can kill people, just not as well as humans do, seems an unsatisfactory goal. Beyond this high-level issue, further ethical problem areas remain to be explored. One of the most elementary ones (and the focus of this workshop) is to define clear rules and guidelines that allow for negotiation and conflict resolution between multiple parties (i.e., "driving algorithms" in automated vehicles). The ultimate objective would be to define an ethically fair set of rules on which all decisions are based, even though this might not be possible at all. This leads to two related questions of similarly elementary nature: "How can these rules and the relevant decision-making factors in traffic situations be displayed in a vehicle?" and "Should a machine be allowed to act on (or even enforce) such rules when human lives are on the line?"